When Coding Gets Cheap

Bruce Hart

Coding is getting cheap. That does not mean software is getting less important.

A couple of years ago, the hot take was: "LLMs will write toy scripts, but they cannot touch real engineering." Then the goalposts moved to: "Sure, they can code, but not like the top humans."

Now we have models like Codex 5.2 and Opus 4.5 that feel uncomfortably close to "a very good senior engineer who never sleeps" for a big slice of day-to-day work.

So yeah: it is fair to ask whether this spells the end of programming as a profession.

I do not think it does.

I think it changes the profession so much that it will feel like the old thing ended.

The bottleneck is moving from syntax to intent

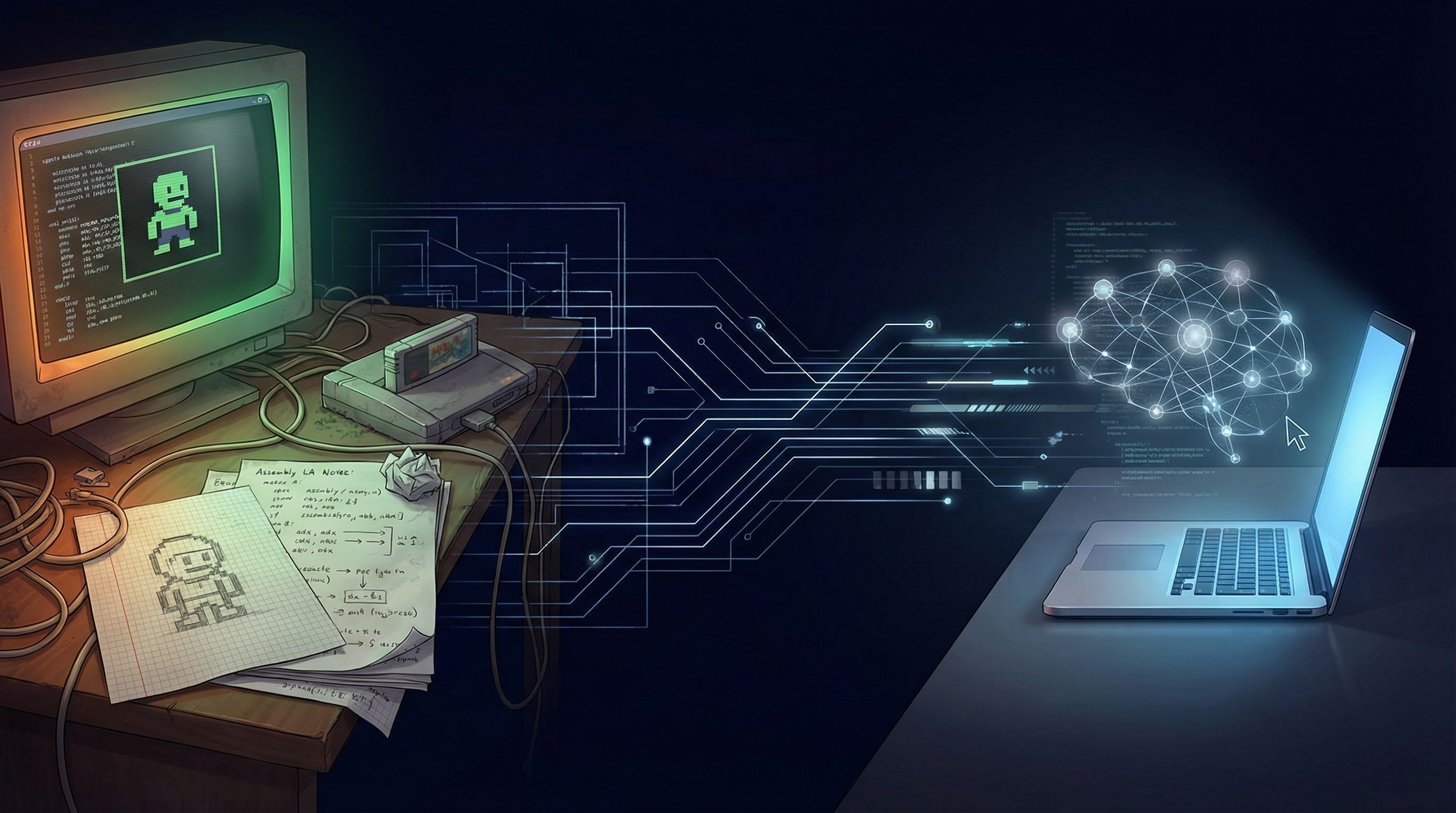

When I was younger, programming meant wrestling the machine.

If you wanted to make an NES game, you were living in assembly, counting cycles, and learning the hardware's weird little secrets. The craft was: get the computer to do the thing at all.

Today we have high-level languages, engines, libraries, and platforms that swallow entire categories of complexity. And games got better not only because GPUs got faster, but because the toolchain got smarter.

LLM coding is the next rung up that ladder.

Not "write faster".

Write different.

The scarce skill shifts from memorizing syntax to deciding what the software should do, decomposing it into testable pieces, spotting edge cases and weird failure modes, and making tradeoffs explicit (speed, cost, UX, security, maintainability).

If the model writes the code, you still own the decisions.

Jevons Paradox: efficiency can increase demand

Jevons Paradox is a simple idea with an annoying habit of being true:

When something becomes more efficient (cheaper per unit), people often use more of it, not less.

Classic example: in 19th-century Britain, more efficient steam engines made coal cheaper per unit of useful work, and total coal consumption went up, because the efficiency unlocked more profitable ways to use energy.
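To make the mechanism concrete, here is a rough sketch, not a real economic model. It assumes demand follows a constant-elasticity curve, and both the `resource_use` helper and the elasticity values are made up for illustration. The idea: doubling efficiency halves the cost per unit of output, so people want more output. Whether total resource use goes up or down depends on how strongly demand reacts to that price drop.

```python
def resource_use(efficiency: float, elasticity: float) -> float:
    """Relative total resource consumption after an efficiency gain.

    efficiency: output per unit of resource relative to baseline
                (2.0 means twice as efficient).
    elasticity: price elasticity of demand as a positive number.
                Hypothetical input, chosen only for illustration.
    """
    # Cost per unit of output falls in proportion to the efficiency gain.
    unit_cost = 1.0 / efficiency
    # Assumed constant-elasticity demand, normalized so baseline quantity is 1.
    quantity = unit_cost ** (-elasticity)
    # Resource needed = output demanded / output per unit of resource.
    return quantity / efficiency


# Double the efficiency and compare three hypothetical demand responses.
for elasticity in (0.5, 1.0, 1.5):
    print(elasticity, round(resource_use(2.0, elasticity), 3))
```

Under these assumptions, an elasticity below 1 means the efficiency gain saves resources, exactly 1 means it is a wash, and above 1 means total consumption rises. That last case is the Jevons regime.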

Software has always kind of worked this way.

Make it cheaper to build an app, and you do not end up with fewer apps. You end up with more apps, more experiments, more niche software, and more software embedded in everything.

The new twist is that LLMs do not just lower the cost of production.

They lower the cost of trying.

That matters because "trying" is where most software dies. Specs are fuzzy. Requirements change. Users surprise you. The first version is wrong.

Cheaper iteration means more shots on goal.
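Here is a back-of-the-envelope version of "more shots on goal". It is a sketch under loud assumptions: every attempt is independent, every attempt has the same chance of working, and the budget, costs, and hit rate are invented numbers. The `chance_of_a_hit` helper is hypothetical, not something from a library.

```python
def chance_of_a_hit(budget: float, cost_per_attempt: float, hit_rate: float) -> float:
    """Probability that at least one attempt succeeds within a fixed budget.

    Assumes independent attempts with an identical success chance (hit_rate).
    """
    # How many whole attempts the budget pays for.
    attempts = int(budget // cost_per_attempt)
    # P(at least one success) = 1 - P(every attempt fails).
    return 1.0 - (1.0 - hit_rate) ** attempts


# Same budget and same per-attempt odds; only the cost of trying changes.
expensive = chance_of_a_hit(budget=100, cost_per_attempt=50, hit_rate=0.1)
cheap = chance_of_a_hit(budget=100, cost_per_attempt=10, hit_rate=0.1)
print(f"2 attempts:  {expensive:.2f}")
print(f"10 attempts: {cheap:.2f}")
```

With these made-up numbers, cutting the cost of an attempt by 5x takes the odds of landing at least one hit from roughly 1 in 5 to roughly 2 in 3, without anyone getting better at guessing what users want.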

AAA quality is about to get weird (in a good way)

A small team making something that looks AAA-ish used to be a meme.

It is becoming plausible.

Not because a model magically invents taste, or turns one prompt into Elden Ring.

But because so much of the labor in modern software is glue: UI wiring, data plumbing, build scripts, refactors, migrations, and "make it work on iOS too".

If that glue work goes from weeks to days, the whole production curve bends.

Games are just the flashy example. The same thing shows up in boring places: internal tools, compliance reporting, data dashboards, scientific workflows, and automation that used to be "not worth it".

The scientist who is not a programmer can now build a little UI to explore data.

The analyst can automate a pipeline.

The founder can ship a prototype without waiting for the "real engineers" to be available.

That is not the end of programming.

It is the start of more people programming.

If code is cheap, what is "software IP" worth?

Here is the uncomfortable thought:

If an LLM can recreate your CRUD app for a few dollars of compute time, the code itself is not much of a moat.

That does not mean software companies stop being valuable.

It means the valuable part shifts.

In practice, I think future software moats look more like proprietary data (or privileged access to data), distribution (audience, brand, partnerships, app store positioning), trust (security track record, reliability, compliance), workflow lock-in (not in a dark-pattern way; in a "this is how work gets done" way), execution speed (the organization that can iterate fastest), and incentives and coordination (the messy human system around the code).

So the question is not "can you build it?"

It is "can you get it adopted, keep it running, and keep improving it faster than everyone else who can also build it?"

That is a very different game.

We might see more closed doors: data, compute, and platforms

If code gets commoditized, companies will protect whatever is left that is scarce.

I would not be surprised if we see tighter control over datasets and logs, more walled-garden platforms, more API paywalls, and more "you can build on us, but only inside our sandbox" policies.

Not because everyone turns evil.

Because competition increases when the cost of entry drops.

When it is cheap to clone features, you start defending the things that cannot be cloned quickly: data, distribution, and trust.

My personal take: I am building more than ever

I genuinely enjoy writing code by hand.

But I also enjoy shipping.

Right now, LLMs are letting me build more software than I ever have, and with less frustration. The fun part is increasingly the thinking: the shape of the system, the user experience, and the weird corner cases.

I do not know where valuations land when "software is cheap" becomes the default.

Maybe Jevons wins and the world just absorbs an absurd amount of new software.

Maybe some categories get crushed because the IP was always more fragile than we admitted.

Probably both.